12 Movies You Had No Idea Were Shot On Virtual Sets

It's no secret how large a role computers play in movies, not just today but going back to at least the 1990s. But exactly how computers figure into filmmaking has changed quite a bit since the early days of green screens and awkward CG effects. In recent years, new technology has emerged that has drastically changed the way movies are made, and it has become far more prevalent than you may realize.

Built on the same kind of technology that video games have used for decades now, virtual production gives filmmakers deeper immersion and more on-the-fly flexibility than a traditional green screen. In a virtual production, screens can completely surround a physical set and can either supplement existing physical objects or build the entire image within the computer. It also lets the crew manipulate elements in real time and make changes on the spot for actors to respond to. With a green screen, by contrast, the imagery isn't added until after the actors have finished their parts, and the old-school alternative meant having actors perform in front of video footage.

While the technology was first put to major use only in the early 2010s, it has advanced very quickly and is now used in just about every genre, not only the expected sci-fi and superhero fare. It won't be long before it's a common fixture in almost every major Hollywood production, and even in some smaller-scale projects.

Oblivion

Though virtual production didn't start being heavily discussed on a mainstream level until the late 2010s, one of the first major uses of the technology in a big Hollywood production came by way of the 2013 sci-fi adventure film "Oblivion." Set in a post-apocalyptic 2077 in which an alien invasion has destroyed the Moon and left the Earth uninhabitable, the film stars Tom Cruise in dual roles as two clones of a man named Jack Harper, both technicians who repair and maintain the drones that attack the aliens still scavenging the Earth.

The primary use of virtual sets in "Oblivion" was to represent the film's Sky Tower location. In an interview with Art of VFX, "Oblivion" VFX supervisor Bjørn Mayer revealed that the tower's floors, walls, and ceilings were physical set pieces, but the surrounding sky and clouds were created using projectors on a wraparound backdrop. That allowed the actors to see and react to the backgrounds in real time, rather than acting in front of a green screen and having the imagery added in post-production, which was the standard before virtual sets. While plenty of movies and TV shows used some rudimentary form of virtual production before this, "Oblivion" is generally considered the first major production to use it in the modern sense.

The Lion King (2019)

There was a lot of talk about how the Disney+ series "The Mandalorian" relied heavily on virtual sets built with brand-new technologies that combined the work of multiple effects companies, and even used software that had previously been used primarily to make video games. Much of that was spearheaded by series creator Jon Favreau, who was inspired to bring virtual production to the "Star Wars" universe after using it for his 2019 remake of "The Lion King."

Favreau's "The Lion King" is sometimes erroneously referred to as a live-action remake because the movie's environments are so indistinguishable from real life. Many viewers assumed that real-world locations had been filmed and the CG animals added afterward. In reality, while reference photos were used, the entire movie was built within computers.

Per The Hollywood Reporter, the crew used virtual-reality tools to step into the computerized world and edit shots in real time, letting Favreau and his team move "cameras" around the virtual set the same way they would on a physical set or on location in Africa. This gave the movie's cinematography a much more lifelike feel than that of traditional computer-animated films, and it further fed the misconception that the movie used actual footage of real jungles and deserts.

Rogue One: A Star Wars Story

"Rogue One: A Star Wars Story" had a lot going on in terms of effects. Beyond the usual large-scale intergalactic battles, it digitally recreated actors, including the late Peter Cushing, so they could appear in the movie as it told the story of the Rebel Alliance's first victory over the Empire, which sets up the events of "A New Hope." "Rogue One" was also among the first movies to use the virtual production techniques that Industrial Light & Magic later developed into its proprietary StageCraft technology, which helps replicate traditional camerawork and cinematography within a virtual set and camera system.

George Lucas went all-in on creating entirely virtual sets beginning with "The Phantom Menace," but the technology of the time was still hampered by its reliance on green screens. Especially in retrospect, this gives the backgrounds in those movies a stilted feel that doesn't mesh well with the human actors or the physical elements of the scenes; everything looks very much like people walking in front of green screens. Incorporating virtual production into the 2010s run of "Star Wars" movies finally accomplished what Lucas was trying to do back then, and the effects in those movies will hopefully hold up better 20 years down the road than the prequel trilogy's effects do today.

The Irishman

When Martin Scorsese set out to make the gangster epic "The Irishman," the plan was for the movie's leads, Robert De Niro, Al Pacino, and Joe Pesci, to play their characters across multiple decades. This meant they needed to look like they were in their 30s in the earlier scenes and in their 50s and 60s by the end. Rather than try to achieve the effect with makeup, the filmmakers decided it would be more realistic to use computers.

Scorsese also knew that old-school performers like De Niro, Pacino, and Pesci weren't going to want to wear helmet cams or any of the other intrusive gear that typically comes with facial effects work. He needed his actors to focus on their performances rather than on facilitating digital trickery. So, per The Hollywood Reporter, ILM got involved and used special cameras to create virtual reproductions of the sets and locations the actors would be working in, which allowed them to shoot their scenes in a mostly traditional manner.

Afterward, the effects team had all the data it needed to digitally adjust the actors' ages to fit whatever point in history a particular scene took place in, and to tweak everything within the virtual camera system. It also allowed for digital reshaping of the actors' bodies, making them thinner when their characters were supposed to be younger and heavier as they aged.

The Midnight Sky

Based on the novel "Good Morning, Midnight" by Lily Brooks-Dalton, Netflix's "The Midnight Sky" follows a brilliant but reclusive scientist named Augustine (George Clooney, who also directed), who has spent years researching the possibility of habitable planets other than Earth. That research becomes especially important in the film's post-apocalyptic setting, where humanity has been all but wiped out (though not Augustine) and the Earth has been contaminated by deadly radiation.

Another movie to use ILM's StageCraft technology alongside work from other effects houses, "The Midnight Sky" used a virtual set (per FX Guide) for two specific purposes. Early in the movie, wraparound LED screens created the effect of Augustine looking out from an observatory at the vast Arctic wilderness around it. Later in the film, those same screens, still surrounding the physical observatory set, were used for scenes in which Augustine communicates with a spaceship.

Beyond allowing for a more believable and immersive effect for both the actors and the audience, the technology was also used in part because "The Midnight Sky" was planned to have a wide IMAX theatrical release. That release didn't pan out because of the COVID-19 pandemic, though a few special IMAX screenings of the movie did go ahead toward the end of 2021.

Bullet Train

Prepare to be completely and utterly shocked: "Bullet Train" wasn't filmed on an actual, moving train. Instead, it was shot within a train-shaped set, and effects trickery was applied to give the appearance of the scenery whizzing by outside. All sarcasm aside, while it was probably a given that viewers were just looking at video footage of blurred buildings and lights, it was also easy to assume that the movie's crew just went the green screen route.

Instead, while Ladybug (Brad Pitt) deals with various obstacles on his mission to secure a briefcase aboard the titular high-speed train, the flashes of the Tokyo skyline that constantly light up the train's windows were achieved with 98-foot LED walls on either side of the physical train set (per Befores & Afters). The custom-made screens could either stretch the entire length of the full set of train cars or be wrapped around an individual car when the windows at the ends of that car needed to be shown, and the footage could be adjusted so it looked like the train was moving toward or away from its destination.

This meant that director David Leitch never had to stage scenes so that the edges of a green screen stayed out of frame, and the action could be shot from literally any angle without breaking the illusion of a train speeding through Tokyo.

War for the Planet of the Apes

We've long moved beyond the cheesy-looking masks with barely moving mouths from the classic "Planet of the Apes" movies. The modern series boasts some extremely impressive apes, nearly indistinguishable from the real thing at first glance, beyond the fact that they talk, of course. As good as the apes looked in the first two installments of the reboot trilogy, the virtual production techniques that came into being after "Dawn of the Planet of the Apes" really helped make 2017's "War for the Planet of the Apes" pop visually.

It's one thing to have a couple of apes on screen at any given moment, but "War for the Planet of the Apes" had massive set pieces that saw entire armies of the creatures filling the screen. To accomplish that, the usual method of having an actor perform a scene and then turning them into an ape in post-production didn't cut it. Per FX Guide, the production used virtual sets that allowed for far more apes on screen than ever before, on a scale previously unheard of for this type of film.

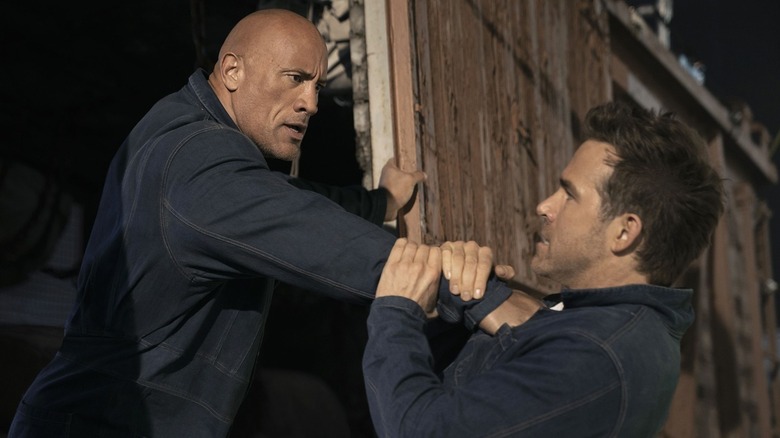

Red Notice

The world of streaming moves quickly, and original movies for streaming platforms tend to be on tighter schedules than theatrical releases. One way to make films faster is to use virtual production, as Netflix did for its 2021 action comedy "Red Notice." Starring Dwayne Johnson as an FBI agent chasing rival art thieves played by Ryan Reynolds and Gal Gadot (though allegiances constantly shift and double-crosses come frequently), the movie was a huge hit for Netflix and was the fifth most streamed movie of that year on any platform.

Visual effects company Lux Machina, which also worked on "Oblivion" and is one of the pioneers of virtual sets for movies as a result, went all out for "Red Notice," giving the production multiple LED walls, an LED ceiling, and several smaller mobile screens that could be moved and positioned as needed. This made possible scenes like the fight between Johnson and Reynolds on a moving train, Johnson climbing around on the outside of a helicopter, and a scene where Johnson and Gadot are on a boat.

It's easy to see how Netflix was able to secure its very busy trio of stars for two "Red Notice" sequels, given that the films can be shot almost entirely in a single studio. Traveling to different sets and locations requires a much bigger time commitment than a virtual production does.

The Batman

It goes without saying that superhero movies set in other dimensions or on alien worlds rely heavily on digitally created environments. What is probably more surprising is when a movie like "The Batman" uses virtual sets despite being largely grounded in reality and taking place in a city meant to seem as though it could easily exist. Considering it was directed by Matt Reeves, who also helmed the aforementioned "War for the Planet of the Apes," it makes a little more sense that he would use a technology he was already familiar with to deliver the most recent cinematic take on the Caped Crusader.

Whereas previous Batman movies either relied heavily on physical sets or used a real city like Chicago to stand in for Gotham, the filmmakers behind "The Batman" decided to construct much of Bruce Wayne's famed hometown within virtual sets, as explained in a "The Making Of" clip that HBO Max released on YouTube. Virtual sets also remove the pressure of capturing certain times of day within the brief window in which they actually occur. A scene set at dusk, with Gotham bathed in beautiful orange hues, can take as long as it needs to get right, because a virtual sunset lasts as long as the crew needs it to.

Ford v Ferrari

While a fair amount of the driving, and even the crashes, in the critically acclaimed "Ford v Ferrari" is real, a lot of digital trickery was also used to achieve its pulse-pounding racing and driving scenes. Virtual sets helped build out the courses, extend the crowds (or sometimes build them completely from scratch), and, perhaps most importantly, keep everything looking accurate to the movie's time period.

In an interview with FX Guide, "Ford v Ferrari" postvisualization supervisor Zack Wong revealed that the team relied heavily on Unreal Engine, a creation tool that has been used to build thousands of video games, starting with its namesake game, "Unreal," in 1998. It allowed the effects team to tweak camera angles and other aspects of the races in real time, and to add effects that made the cars appear to be racing in the rain, at dawn, and so on. Unreal Engine also played a large part in giving everything an appropriately stylized look, letting it match the traditional footage more closely than would have been practical with regular digital effects.

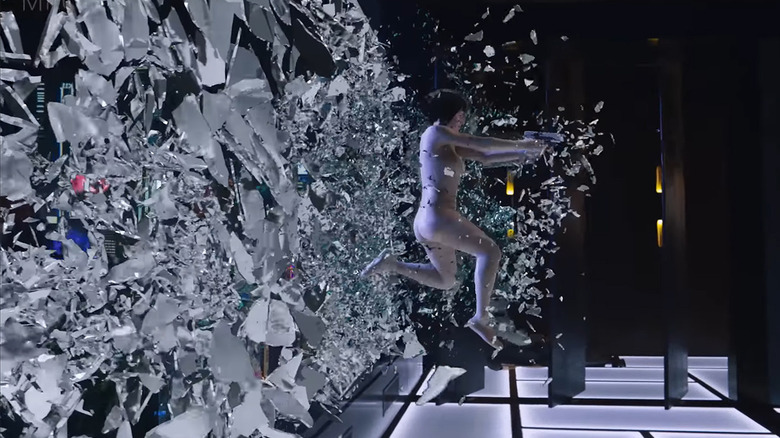

Ghost in the Shell (2017)

Reviews for the 2017 live-action remake of "Ghost in the Shell" were largely negative, though two things were widely praised: Scarlett Johansson's performance (her unfortunately whitewashed casting notwithstanding) and the jaw-dropping visual effects. Based on the manga series and anime adaptations of the same name, the movie takes place in a near-future version of Japan where humans and robots have started to become indistinguishable from one another.

Like any live-action movie that seeks to replicate the elaborate visuals of manga and anime, "Ghost in the Shell" relies very heavily on digital effects. One of the defining characteristics of the film's world is its futuristic cityscape, full of cyberpunk skyscrapers and holographic advertisements called Solograms. That's where virtual production came into play most heavily: effects company MPC created nearly 400 Solograms and scattered them around the virtual city that served as the movie's setting (per CNET). The scale of the 3D imagery was unprecedented at the time, so much so that MPC had to develop an entirely new set of software tools specifically for "Ghost in the Shell" to handle the monumental task.

Top Gun: Maverick

It needs to be said right off the bat that the majority of the death-defying aerial maneuvers shown in "Top Gun: Maverick" are 100% real. A big part of why the movie looks as great as it does, and why it earned the acclaim it did, is that it relied on very little digital trickery and was instead an old-school spectacle where what you see is what actually happened. That was a major point of pride in the movie's marketing, as it should have been, and the results speak for themselves.

But that's not to say that "Maverick" didn't get plenty of computer assistance. In this particular case, it was largely used to assemble and properly present the real footage that was shot, rather than to fabricate things from scratch with digital effects. That was especially true of the close-ups and cockpit views, as "Maverick" production VFX supervisor Ryan Tudhope revealed in an interview with AWN, since it would have been nearly impossible to mount a camera on a fighter jet screaming through the air and performing elaborate aerial maneuvers that could still cleanly capture the pilot's facial expressions. That was one of the most prominent ways virtual sets came into play on "Maverick," along with basic cleanup of existing shots and footage.