Oppenheimer's Christopher Nolan Compares The Rise Of AI To The Creation Of The Atomic Bomb

Artificial intelligence has dominated conversations across society and every industry, including entertainment, for the past several years. As it turns out, even Christopher Nolan's "Oppenheimer," the 2023 film that won the Oscar for best picture, touches on the perils of autonomous machinery, albeit indirectly.

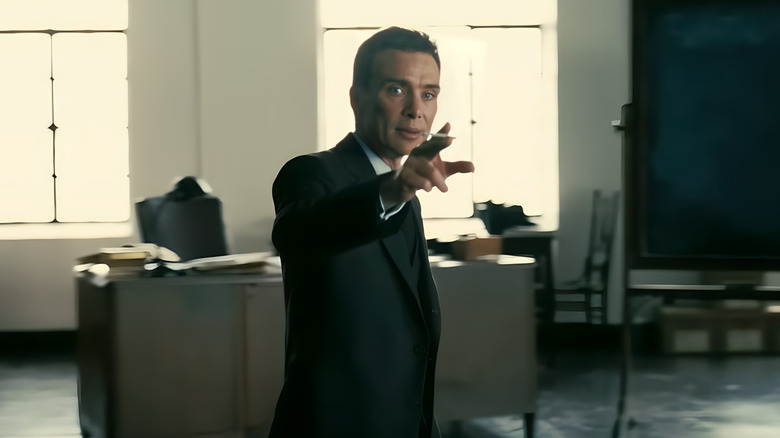

The movie centers on the life of J. Robert Oppenheimer, the theoretical physicist who led the Manhattan Project to develop the first atomic bomb. The subject matter may be pre-computer, but nevertheless, it grapples with the same struggle of how to treat emerging technology. It's a fact that isn't lost on the film's director, either. In an interview with Yahoo!, the British-born filmmaker called the connection out, saying, "Artificial intelligence researchers refer to the present moment as an 'Oppenheimer moment.'" Nolan added that AI researchers are able to look at the Oppenheimer story "for some guidance as to what is their responsibility — as to what they should be doing."

The threat posed by the two technologies, set nearly eight decades apart, isn't the only parallel. The act of pioneering and blindly testing a still-underdeveloped field connects them, too. For instance, before the first atomic bomb was detonated, there was a very real concern that it could ignite the Earth's atmosphere and destroy the entire planet in one go. There are similar worries about AI suddenly "gaining cognition" and turning on its creators before they even realize what's happening.

Ethics and the ultimate expression of science

The struggle with technologies like nuclear power and artificial intelligence is that they aren't all bad. On the contrary, while Oppenheimer's team was deliberately trying to end a war, the development of controlled nuclear fission reactions was of more than just military interest. It had genuine potential for good, which is why Nolan referred to the effort as "the ultimate expression of science... which is such a positive thing, having the ultimate negative consequences."

Those who explore and apply the theories behind major scientific concepts typically aren't villains. They're scientists doing their jobs and passionately pursuing their field, just like any other professional. The problem is balancing that "ultimate expression" of one's craft with the ethical issues that it can create along the way. It's this struggle that led Yoshua Bengio, considered one of the godfathers of AI, to publish an op-ed in The Economist warning about the perils of artificial intelligence. After describing AI's incredible capacity and the potential for exponential advances in future superhuman iterations, Bengio points out how close we may be to realizing that possibility.

"When can we expect such superhuman AIs?" Bengio asks. "Until recently, I placed my 50% confidence interval between a few decades and a century. Since GPT-4, I have revised my estimate down to between a few years and a couple of decades, a view shared by my Turing award co-recipients." The computer scientist added, "Are we prepared for this possibility? Do we comprehend the potential consequences? No matter the potential benefits, disregarding or playing down catastrophic risks would be very unwise."

Oppenheimer examines the issue...but doesn't offer answers

The potential threat of AI is very real, even if it's still mostly theoretical. So was the atomic bomb until the Trinity test, and after that there was no turning back. Viewed in the context of Oppenheimer's story, the modern struggle with AI development is clearly part of an ongoing dilemma that is decades, even centuries, old. It is also one without a clear answer, even after humanity has grappled with repeated waves of scientific advancement.

"I don't think it offers any easy answers," Nolan said, referring to the story addressed in his film. "It is a cautionary tale. It shows the dangers. The emergence of new technologies is quite often accompanied by a sense of dread about where that might lead."

The conversation surrounding AI hasn't been this loud in years. While the debate is clearly necessary, it doesn't change the fact that these technologies are progressing quickly. If we're to learn any lesson from "Oppenheimer," it has to be to ask the tough questions before, not after, we've felt the consequences. The challenge is doing that effectively on a global scale.