Could AI Evolve Into Terminator's Skynet In Our Lifetime? Our Expert Explains

Artificial intelligence has been advancing in leaps and bounds lately, leaving many to wonder exactly where its rapid development is headed. Some people worry about the constant flurry of AI-themed products and how they handle personal data. Increasingly realistic deepfake technology (and the image rights issues that come with it) was among SAG-AFTRA's major concerns during the actors' union's 2023 strike. Artists have also spoken out against AI image generators for using their work as a basis for AI's recreations.

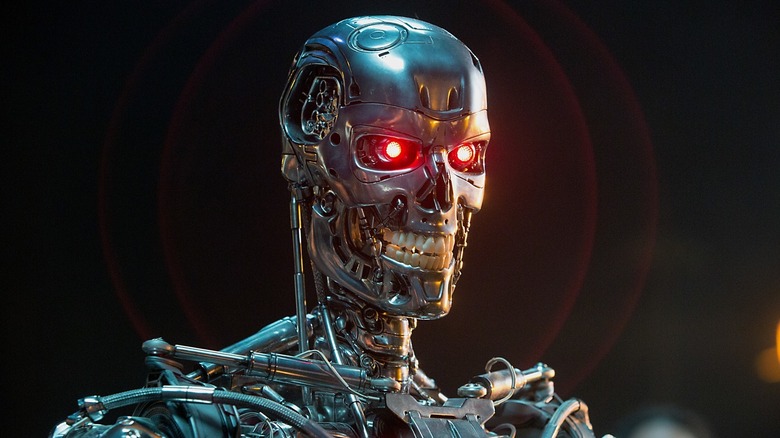

But we've all seen enough sci-fi movies to know what else humans fret about with AI: What do these systems think of us? And, of course, will they in time replace us? Regardless of how likely it is, the prospect of AI turning against mankind poses an existential threat on par with any other apocalyptic scenario. The idea of an AI-fueled doomsday is unnerving, but how far are we from a Skynet-style artificial intelligence that could cause such destruction? Would this even interest AI, or is it more of a human idea projected onto its circuit boards?

To investigate this further, Looper asked AI expert Dev Nag, CEO and founder of QueryPal, what we should be concerned about with AI. It turns out that robots gaining sentience is fairly low on the list of woes and a prospect that won't happen anytime soon.

Movies love an evil AI

First, let's unpack why these fears of robotic overlords exist in the first place. The answer? Pop culture tends to portray powerful AI characters as villains because it makes for an intriguing and humanist story: something man-made overriding its maker. Of course, the poster entity for artificial intelligence that wants to eradicate all humanity is Skynet from the "Terminator" franchise. So, what happens there? Well, the sentient neural net-based AI dislikes human scientists' attempts to shut it down so much that it retaliates with a nuclear apocalypse. Yikes!

However, the "Terminator" franchise's overarching villain is just one of the many AI baddies who have turned against humans. HAL 9000 from Stanley Kubrick's "2001: A Space Odyssey" is a well-known example of the genre. "The Matrix" films are all about humanity's borderline hopeless struggle against sentient machines. The Marvel Cinematic Universe has explored the theme with Ultron (James Spader) from "Avengers: Age of Ultron," while "Mission: Impossible – Dead Reckoning Part One" introduces the Entity, a rogue AI that poses a huge threat to cybersecurity and Ethan Hunt (Tom Cruise). Granted, not every artificial intelligence in fiction is villainous, but the entertainment industry certainly seems to like iconic, world-ending AI antagonists.

AI sentience can be difficult to determine

Technological concerns and pop culture depictions aside, there's no denying that artificial intelligence is developing rapidly. AI expert Dev Nag delved more into the technology's recent developments, stating: "Sentience is a very long way off for AI."

But before you breathe a sigh of relief, there's a caveat here. As Nag explained it, sentience is a difficult concept that can't be readily measured when it comes to artificial intelligence. "Remember that we still don't really have a good external way to determine sentience, and even the most advanced Generative AI models are still very poor at (what is called) planning and deductive reasoning, much less sentience," he said. "We don't know if someone is having rich, subjective experiences, or is simply reporting them; after all, chatbots have been 'faking' subjective experiences for decades now, long before ChatGPT."

As such, the situation is not only complicated but incredibly difficult to monitor — to the point that humans might not even recognize an artificial intelligence that has become sentient. "What's scary about this is that we might never know, from the outside, when machines pass from the appearance of sentience to the real thing," Nag said. "It's an invisible event horizon."

AI can be dangerous even without evolving to Skynet levels

Still, there are other stages of AI to pay attention to as well. "In the realm of science fiction, the drama of AI is almost always depicted in moving up the three levels from tool to autonomous agent to full sentience," Nag said. "The drama comes both from how this brings increased danger as AI diverges in its goals from ours, but also in how we understand ourselves by watching deeper reconstructions of what makes us human."

What exactly do these levels mean, and which phase of AI are we in currently? Well, really, we're at the start, in the "tool" zone. The tool level, Nag explained, means an AI that may be capable but is still controlled by humans, like Paul Bettany's J.A.R.V.I.S. in the Marvel Cinematic Universe. An autonomous agent is an AI that has a degree of freedom but still operates within certain parameters; think Data (Brent Spiner) in "Star Trek: The Next Generation." Full sentience is essentially what J.A.R.V.I.S. attains after becoming Vision, operating outside of its original programming to be as contradictory and surprising as the human experience.

That last level is what would apply to a Skynet-style scenario, but as Nag pointed out, AI poses far more immediate concerns. "In reality, the initial danger of AI won't come from the last stage of AI (sentience), but from the first (tool)," Nag said, giving the example of a Hong Kong finance worker who was tricked into paying out $25.6 million by an elaborate deepfake conference call hoax. "This is the danger that will define the next few years, not anthropomorphized supervillain AI (yet)."